By Michael Westerfield, Senior Solution Architect

Dual In-Line Memory Module (DIMM): RDIMMs versus LRDIMMs, which is better?

When Intel came out with E5-v2 CPUs, they introduced a new memory type called Load-Reduced DIMM (LRDIMM). At the time, servers could accept three types of memory: LRDIMM, Registered DIMM (RDIMM), and Unbuffered DIMM (UDIMM). UDIMMs later fell out of use in servers because of their lower bandwidth and capacity. This blog focuses on RDIMMs and LRDIMMs and when to use each.

We have encountered many clients who assume that because the LRDIMM is the newest memory on the block, it must be the best memory to use in their new server. However, this is often not the case!

RDIMMs Are Typically Faster

Registered DIMMs improve signal integrity by placing a register on the DIMM that buffers the address and command signals between the memory controller and each of the Dynamic Random-Access Memory (DRAM) chips on the DIMM. This permits each memory channel to support up to three dual-rank DIMMs, greatly increasing the amount of memory the server can support. The trade-off is that this partial buffering slightly increases both power consumption and memory latency.

I bet you are wondering what “dual-rank” means, so here is a description of “rank.” The rank of a DIMM is how many 64-bit chunks of data exist on the DIMM. You can think of a single-rank DIMM as having DRAM on one side of the module and thus one 64-bit chunk of data. DIMMs with DRAM on both sides typically have a 64-bit chunk of data on each side and thus are dual rank. There are even quad-rank DIMMs, where each side of the DIMM has two 64-bit chunks of data.
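To make the rank idea concrete, here is a minimal Python sketch that reads an organization label such as 1Rx8 and works out how many data DRAM chips it implies. It assumes the usual reading of the label (ranks, then the data width of each chip in bits) and ignores the extra chips used for ECC; the function names are made up for this illustration.

```python
DATA_BUS_BITS = 64  # one rank fills the 64-bit data bus (ECC bits ignored here)


def parse_organization(label: str) -> tuple[int, int]:
    """Split a label such as '2Rx4' into (ranks, chip_width_bits)."""
    ranks, width = label.split("Rx")
    return int(ranks), int(width)


def data_chips_on_dimm(label: str) -> int:
    """Data DRAM chips implied by the rank count and the chip width."""
    ranks, width = parse_organization(label)
    chips_per_rank = DATA_BUS_BITS // width  # chips needed to span 64 bits
    return ranks * chips_per_rank


for label in ("1Rx8", "2Rx8", "2Rx4"):
    print(label, data_chips_on_dimm(label))
```

Under these assumptions a 1Rx8 module carries 8 data chips, while a 2Rx4 module carries 32.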

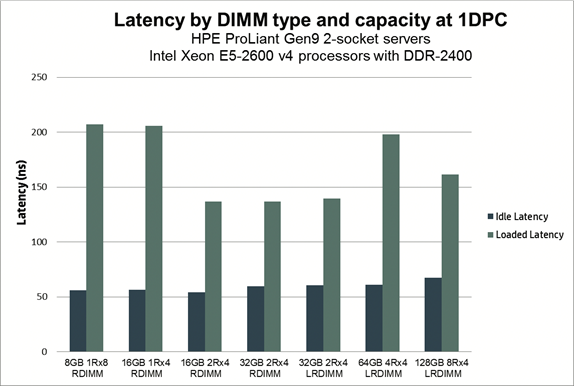

To compare these two memory types, the graph below details the difference in latency between RDIMMs and LRDIMMs on a Hewlett Packard Enterprise (HPE) ProLiant Gen9 two-socket server using two Intel Xeon E5-2600 v4 processors.

You will notice that the loaded latency for the RDIMMs with only a single rank (1Rx8) is actually higher than for the RDIMMs and LRDIMMs that have a higher capacity. That is because single-rank modules do not allow the memory controller to parallelize memory requests from the CPU the way that modules with two or more ranks do.

There are a number of factors that influence memory latency in a system.

DIMM Speed

Faster DIMM speeds deliver lower latency, particularly loaded latency. Under loaded conditions, the largest contributor to increased latency is the time that memory requests spend in a queue waiting to be executed. The faster the DIMM speed, the more quickly the memory controller can process the queued commands. For example, memory running at 2400 megatransfers per second (MT/s) has about 5% lower loaded latency than memory running at 2133 MT/s.
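As a rough illustration of what the two speed grades mean, the sketch below converts MT/s into theoretical peak bandwidth per channel, assuming a 64-bit (8-byte) data bus. The roughly 5% loaded-latency figure above is a measured result and is not derived from this arithmetic.

```python
BUS_BYTES = 8  # assumed 64-bit data bus per memory channel


def peak_bandwidth_mb_s(speed_mt_s: int) -> int:
    """Theoretical peak bandwidth of one channel in MB/s."""
    return speed_mt_s * BUS_BYTES


slow = peak_bandwidth_mb_s(2133)
fast = peak_bandwidth_mb_s(2400)
print(slow, fast)  # 17064 19200
print(f"{(fast / slow - 1) * 100:.1f}% more peak bandwidth")
```

Real throughput will be lower than these peaks, since they ignore refresh, bus turnarounds, and queuing.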

Ranks

For the same DDR4 memory speed and DIMM type, more ranks will typically lower the loaded latency, up to a point. While more ranks on the channel give the memory controller a greater capability to parallelize the processing of memory requests and reduce the size of request queues, they also require the controller to issue more refresh commands. The benefits of greater parallelism outweigh the penalty of the additional refresh cycles up to four ranks. The net result is a slight reduction in loaded latencies for two to four ranks on a channel. With more than four ranks on a channel, there is a slight increase in loaded latency.
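The rule of thumb for ranks can be written down as a small Python sketch. It simply counts the ranks on a channel (DIMMs per channel times ranks per DIMM) and reports the trend described here; the function names and wording of the results are illustrative, not taken from any HPE tool.

```python
def ranks_on_channel(dimms_per_channel: int, ranks_per_dimm: int) -> int:
    """Total ranks the memory controller sees on one channel."""
    return dimms_per_channel * ranks_per_dimm


def loaded_latency_trend(total_ranks: int) -> str:
    """Qualitative trend relative to a single rank on the channel."""
    if total_ranks < 1:
        raise ValueError("a populated channel has at least one rank")
    if total_ranks == 1:
        return "baseline"
    if total_ranks <= 4:
        return "slightly lower"   # parallelism outweighs extra refreshes
    return "slightly higher"      # extra refreshes start to dominate


for dpc, ranks in ((1, 1), (2, 2), (3, 2)):
    total = ranks_on_channel(dpc, ranks)
    print(dpc, "DPC x", ranks, "ranks ->", total, loaded_latency_trend(total))
```

For example, two dual-rank DIMMs per channel land at four ranks, the top of the favorable range, while three dual-rank DIMMs push the channel to six.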

CAS Latency

CAS (Column Address Strobe) latency represents the basic DRAM response time. It is specified as the number of clock cycles (e.g., 13, 15, 17) that the controller must wait after issuing the Column Address before data is available on the bus. CAS latency is a constant in both loaded and unloaded latency measurements (lower values are better).
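Because CAS latency is given in clock cycles, it helps to convert it to nanoseconds before comparing DIMMs of different speeds. The sketch below does that, using the fact that DDR memory transfers data twice per clock, so the clock rate in MHz is half the MT/s figure. The pairings of CL 15 with 2133 MT/s and CL 17 with 2400 MT/s are illustrative assumptions, not values from the HPE document.

```python
def cas_latency_ns(cas_cycles: int, speed_mt_s: int) -> float:
    """Convert CAS latency from clock cycles to nanoseconds."""
    clock_mhz = speed_mt_s / 2      # DDR: two transfers per clock cycle
    cycle_ns = 1000 / clock_mhz     # duration of one clock cycle
    return cas_cycles * cycle_ns


for cl, speed in ((15, 2133), (17, 2400)):
    print(f"CL{cl} @ {speed} MT/s = {cas_latency_ns(cl, speed):.2f} ns")
```

With these example values both DIMMs come out at roughly 14 ns, which shows why a higher cycle count on a faster DIMM does not necessarily mean a slower response.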

Utilization

Increased memory bus utilization does not change the low-level read latency on the memory bus. Individual read and write commands always complete in the same amount of time regardless of the amount of traffic on the bus. However, increased utilization causes increased memory system latency because requests wait longer in the queues within the memory controller.
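To see why queuing dominates under load, here is a minimal sketch that uses the textbook single-server (M/M/1) queuing formula as a stand-in for the memory controller. This is an assumption made purely for illustration: real controllers are far more complex, and the 10 ns service time is a made-up number.

```python
def mean_latency_ns(service_ns: float, utilization: float) -> float:
    """Mean time in an M/M/1 queue: service time plus waiting time."""
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in the range [0, 1)")
    return service_ns / (1 - utilization)


SERVICE_NS = 10.0  # hypothetical fixed time to execute one command
for utilization in (0.1, 0.5, 0.9):
    latency = mean_latency_ns(SERVICE_NS, utilization)
    print(f"{utilization:.0%} utilized -> {latency:.1f} ns")
```

The per-command service time never changes in this model, yet the total latency climbs steeply as utilization approaches 100%, which mirrors the behavior described above.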

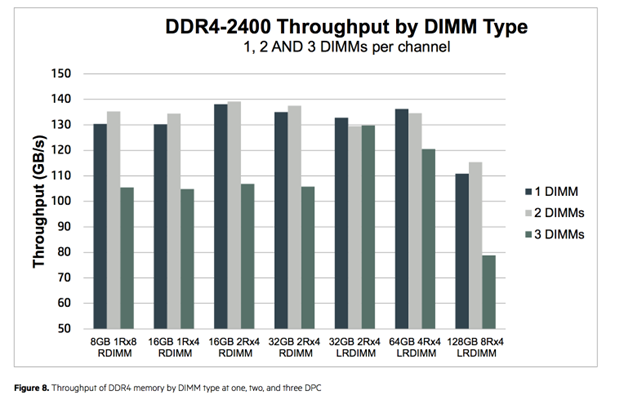

The actual throughput of the memory remains fairly consistent unless you use three DIMMs per channel (DPC) or move to 128GB LRDIMMs.

LRDIMMs Provide Better Capacity

LRDIMMs use memory buffers to consolidate the electrical loads of the ranks on the LRDIMM into a single electrical load, allowing up to eight ranks on a single DIMM. Using LRDIMMs, you can configure systems with the largest possible memory footprints. However, LRDIMMs also use more power and have higher latencies than the lower-capacity RDIMMs.

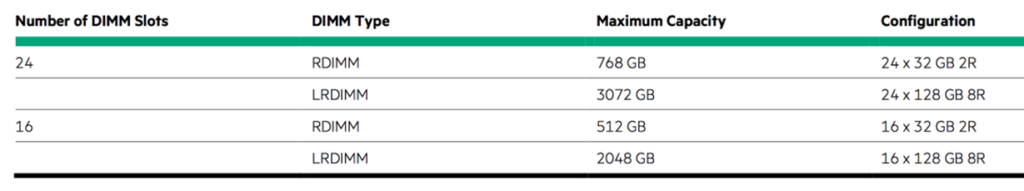

Below is a table showing the greater capacity that can be obtained using LRDIMM vs RDIMM.

As can be seen, you can populate your servers with four times the amount of memory by using LRDIMMs instead of RDIMMs.
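The four-times figure can be checked with some quick arithmetic. The sketch below assumes a two-socket server with four memory channels per socket and three DIMM slots per channel, and compares 32GB RDIMMs with 128GB LRDIMMs. Those slot counts are assumptions for this example, so check them against your server's specifications.

```python
SOCKETS = 2              # assumed two-socket server
CHANNELS_PER_SOCKET = 4  # assumed memory channels per CPU
DIMMS_PER_CHANNEL = 3    # assumed slots per channel (3 DPC)


def max_capacity_gb(dimm_size_gb: int) -> int:
    """Total memory with every slot filled with one DIMM size."""
    slots = SOCKETS * CHANNELS_PER_SOCKET * DIMMS_PER_CHANNEL
    return slots * dimm_size_gb


rdimm_total = max_capacity_gb(32)     # largest RDIMM considered here
lrdimm_total = max_capacity_gb(128)   # largest LRDIMM considered here
print(rdimm_total, lrdimm_total, lrdimm_total / rdimm_total)
```

Under these assumptions the server tops out at 768GB with RDIMMs and 3072GB with LRDIMMs.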

So, which is better?

As is often the case in the information technology industry, the answer to that question depends on your use case. If you’re looking to use DIMMs that won’t exceed 32GB in size, then 90% of the time you should be using RDIMMs (which are less expensive than LRDIMMs). However, if your server architecture requires DIMMs that are larger than 32GB, go with LRDIMMs.

For optimal performance, the general guidelines are to populate your server with one or two RDIMMs per memory channel with two ranks each.
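The guidance above boils down to a simple rule of thumb, sketched here in Python. The 32GB threshold comes from this post; the function name is invented for illustration, and a real sizing decision should also weigh cost, power, and the specific server's supported configurations.

```python
RDIMM_MAX_GB = 32  # threshold taken from the rule of thumb in this post


def suggest_dimm_type(dimm_size_gb: int) -> str:
    """Suggest a DIMM type from the required size of each DIMM."""
    if dimm_size_gb <= 0:
        raise ValueError("DIMM size must be positive")
    return "RDIMM" if dimm_size_gb <= RDIMM_MAX_GB else "LRDIMM"


for size in (16, 32, 64, 128):
    print(f"{size}GB -> {suggest_dimm_type(size)}")
```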

This post is an extract of the HPE Document: Overview of DDR4 memory in HPE ProLiant Gen9 Servers with Intel Xeon E5-2600 v3 – Best Practice Guidelines